Nanopublication: RAD7KegvZc

Full identifier: https://w3id.org/np/RAD7KegvZcc3ijwC1_FPjt6CP7t5p-sTMbQCMXoELfOqU

Summary

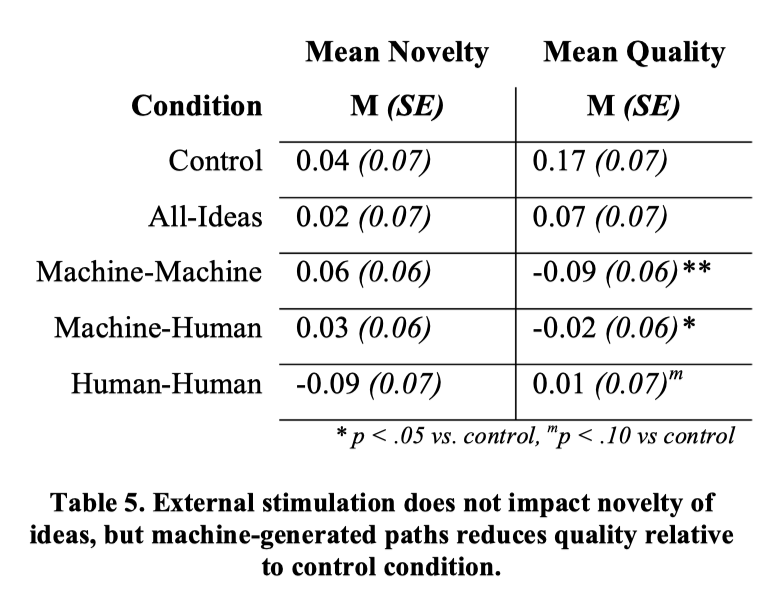

(Table 5)

(Table 5)

Grounding Context

- Who::

We recruited 139 mTurk workers (42% female, mean age = 32.6 years, SD = 9.6) for this study. To ensure quality data, all participants had to have approval rates of at least 95% with at least 100 completed HITs. Participants were paid $2.00 for about 20 minutes of participation (on average), yielding an average hourly rate of $6/hour. (p. 2721)

- How::

- machine-generated clusters, machine-generated labels, dataset

We extracted solution paths for ideas for two problems: 1) new product ideas for a novel “fabric display” (fabric display problem), and 2) ideas for improving workers’ experience performing HITs on mTurk on mobile devices (improve mTurk problem). Both idea datasets were assembled from idea datasets collected in prior studies ([54] for the Fabric Display problem, and [40] for the Improve Turk problem). The ideas in those datasets were collected from mTurk workers, and the authors shared the data with us. We randomly sampled 120 ideas for each problem. (p. 2719)

In the cluster phase, ideas are first tokenized, removing uninformative (stop) words, and then weighted using TFIDF. Each idea then is re-represented as a vector of w TFIDF weights (where w is the number words in the corpus of ideas). We then use LSI to reduce the dimensionality of the vectors. Based on prior experience clustering brainstorming ideas, our intuition is that somewhere between 15 to 25 patterns is often sufficient to adequately describe emerging solution patterns. Therefore, we set our LSI model to retain 15, 20 and 25 dimensions. For each of these parameter settings, we then identify solution paths by finding the same number of clusters of ideas (i.e., 15, 20 or 25) with the Kmeans clustering algorithm. We use the LSI dimension weights as features for the ideas. (p. 2720)

For the label phase, we again select a method that would not require significant parameter tuning. To do this, we treat each cluster as a document, and compute TF-IDF weights for all words. We then choose the top n words with the highest TF-IDF weights within each cluster. N is determined by the average length of human labels (minus stopwords). (p. 2720)

- task(s)

two problems: 1) new product ideas for a novel “fabric display” (fabric display problem), and 2) ideas for improving workers’ experience performing HITs on mTurk on mobile devices (improve mTurk problem). (p. 2719)

- procedure

Participants generated ideas using a simple ideation interface. Inspirations (whether ideas or solution paths) were provided to participants in an “inspiration feed” in the right panel of their interface (see Figure 2). Participants could “bookmark” particular inspirations that they found helpful. No sorting and filtering options were provided. The limited sorting/filtering available in the all-ideas condition might be considered primitive, but it is actually similar to many existing platforms that might provide rudimentary filtering by very broad, pre-defined categories (that may be reused across problem). Participants in the control condition generated ideas with a simpler ideation interface that removed the inspiration feed. (p. 2721)

After providing informed consent, participants experienced a brief tutorial to familiarize themselves with the interface. Embedded within the tutorial was an alternative uses task (where participants were asked to think of as many alternative uses as possible for a bowling pin). generated ideas for both the Fabric Display and Improve Turk problems. Participants were given 8 minutes to work on each problem, and the order of the problems was randomized across participants. Participants in the inspiration conditions received the following instructions regarding the inspirations: “Below are some inspirations to boost your creativity. Feel free to create variations on them, elaborate on them, recombine them into new ideas, or simply use them to stimulate your thinking. If you find an inspiration to be helpful for your thinking, please let us know by clicking on the star button! This will help us provide better inspirations to future brainstormers.” After completing both problems, participants then completed a brief survey with questions about demographics and the participants’ experiences during the task.Participants then (pp .2721-2722)

- machine-generated clusters, machine-generated labels, dataset

- What:: ((pvSG_OWlN))

414 workers from mTurk rated the ideas for novelty and quality (p. 2722)

Quality was operationalized as the degree to which an idea would be useful for solving the problem, assuming it was implemented (i.e., separating out concerns over feasibility), ranging from a scale of 1 (Not Useful at All) to 7 (Extremely Useful). Each worker rated a random sample of approximately 20 ideas. (p. 2722)

While the raw inter-rater agreement was relatively low (Krippendorff’s alpha = .23 and .25, respectively), the overall aggregate measure had acceptable correspondence with each judges’ intuitions. Computing correlations between each judges’ ratings and the overall aggregate score yielded average correlations of .52 for novelty and .58 for quality. (p. 2722)

we normalized scores within raters (i.e., difference between rating and mean rating provide by rater, divided by the standard deviation of the raters’ ratings). (p. 2723)

- Who::